Building a k8s Cluster on a Mac M1 Pro

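Introduction

I wanted my own cluster for learning k8s. My first thought was to rent cloud hosts, but on Vultr or Alibaba Cloud three pay-as-you-go instances come to roughly 600 RMB a month, which stings, and once they are destroyed, rebuilding everything to play again is a hassle. So I decided to use my own M1 Pro instead. Apple Silicon has a few pitfalls, which I was prepared for; the steps below record the build.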

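Requirements

- M1 Pro with 10 cores and 16 GB of RAM; any other Apple Silicon Mac should work too

- A proxy for unrestricted internet access (covered below)

- At least 100 GB of free disk space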

Goals

- 1 master node and 2 worker nodes

- kube-proxy in ipvs proxy mode

- flannel for the network layer

- systemd as the cgroup driver

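Preparation

Install the virtualization software

- Free up disk space; reserve at least 100 GB

- Virtualization software

  - The free options I know of that support Apple Silicon are UTM (open source, free) and VMware Fusion Player (free personal license)

  - I use UTM here: https://mac.getutm.app/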

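Install Rocky Linux 9

CentOS 7 and 8 do not run on Apple Silicon. In short, their ARM kernels use 64K pages, which Apple Silicon does not support; see the VMware community thread for details. So I use Rocky Linux 9 here (the community replacement that appeared after CentOS stopped being maintained, with a fairly active community), which ships a 5.14 Linux kernel. When downloading Rocky 9, choose the ARM64 build; the minimal image is enough.

I won't go through the Rocky Linux installation in UTM; this video covers it: https://www.youtube.com/watch?v=NTOcxlHm_u8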

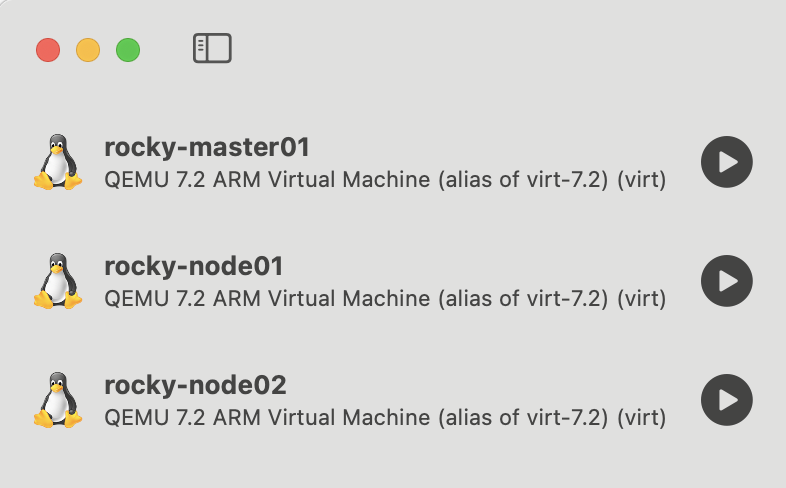

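I started three virtual machines, master01, node01, and node02, each with 4 vCPUs, 4 GB of RAM, and a 100 GB disk.

Notes:

- Choose Shared mode for the network

- Enable the root account and allow SSH for it (this makes the later steps easier)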

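Proxy access

Some downloads can be switched to mirrors inside China, but a proxy is standard kit for a programmer and makes things much easier. The steps below rely on it. If you don't have one, I recommend this one; I have used it for seven or eight years and it has been quite stable.

Notes:

- Inside the VMs, look up the IPs of master01, node01, and node02 and write them down.

- SSH from the host into a VM and run who inside the VM to see the host's IP; write it down.

- Enable the "Allow LAN connections" option in the proxy software.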

Install k8s

Set the hostnames

Run the matching command on each of the three VMs:

hostnamectl set-hostname k8s-master01

hostnamectl set-hostname k8s-node01

hostnamectl set-hostname k8s-node02

Edit /etc/hosts

Append the host entries below to /etc/hosts on all three machines. Note that the IPs are from my local setup; change them to match yours.

192.168.64.2 k8s-master01

192.168.64.3 k8s-node01

192.168.64.4 k8s-node02
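
If you rerun the setup, appending blindly would duplicate these entries. Here is a minimal sketch of an idempotent append; `add_host_entry` is a helper name I made up for illustration, and the optional third argument (the file path) exists only so it can be tried on a scratch file first.

```shell
# Append "IP hostname" to a hosts file only if the hostname is not listed yet.
# add_host_entry is a hypothetical helper, not part of any tool.
add_host_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  # Look for the hostname as a whole field on any non-comment line.
  if ! awk -v n="$name" '
        !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file"; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Usage on each VM (as root), with the IPs from my setup:
#   add_host_entry 192.168.64.2 k8s-master01
#   add_host_entry 192.168.64.3 k8s-node01
#   add_host_entry 192.168.64.4 k8s-node02
```

Running it twice with the same arguments leaves a single entry in the file.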

Install dependencies

yum install -y conntrack ipvsadm ipset jq iptables curl sysstat libseccomp wget vim net-tools git

Disable the firewall

systemctl stop firewalld && systemctl disable firewalld

Flush the iptables rules

yum -y install iptables-services && systemctl start iptables && systemctl enable iptables && iptables -F && service iptables save

Disable swap

swapoff -a && sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab
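
By default the kubelet refuses to start while swap is active, so it may be worth confirming that the command above took effect. A minimal sketch, assuming the usual /proc/swaps layout (one header line, then one line per active swap device); `active_swap_count` is a name I made up, and the file argument is only there for testing.

```shell
# Print the number of active swap devices listed in a /proc/swaps-style file.
# active_swap_count is a hypothetical helper for illustration.
active_swap_count() {
  local file="${1:-/proc/swaps}"
  # Skip the header line; count every remaining non-empty line.
  awk 'NR > 1 && NF > 0 { n++ } END { print n + 0 }' "$file"
}

# Usage inside a VM:
#   [ "$(active_swap_count)" -eq 0 ] && echo "swap is off"
```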

Disable SELinux

setenforce 0 && sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

Tune kernel parameters

cat > kubernetes.conf <<EOF

net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-ip6tables=1

net.ipv4.ip_forward=1

## net.ipv4.tcp_tw_recycle=0

net.ipv4.tcp_tw_reuse=1

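# Avoid swap; it is used only when the system is about to run out of memory (OOM)

vm.swappiness=0

# Do not check whether enough physical memory is available

vm.overcommit_memory=1

# Do not panic on OOM; let the OOM killer handle it

vm.panic_on_oom=0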

fs.inotify.max_user_instances=8192

fs.inotify.max_user_watches=1048576

fs.file-max=52706963

fs.nr_open=52706963

net.ipv6.conf.all.disable_ipv6=1

net.netfilter.nf_conntrack_max=2310720

EOF

net.ipv4.tcp_tw_recycle was removed in kernel 4.12, so I commented it out above; see https://djangocas.dev/blog/troubleshooting-tcp_tw_recycle-no-such-file-or-directory/ for details.

Copy the configuration

cp kubernetes.conf /etc/sysctl.d/kubernetes.conf

Load two networking kernel modules

modprobe br_netfilter

modprobe nf_conntrack

Apply the settings

sysctl -p /etc/sysctl.d/kubernetes.conf
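
To double-check that every key in the file actually took effect, the file can be compared against the live values under /proc/sys. This is only a sketch under a few assumptions: `verify_sysctl` is a made-up helper, it maps dots in key names to slashes, and it expects comments on their own lines (lines starting with # or ;), so a trailing comment on a value line will show up as a difference.

```shell
# Compare key=value lines in a sysctl file with the values under /proc/sys.
# verify_sysctl is a hypothetical helper; the second argument (the root
# directory) exists only so the function can be tried on a fake tree.
verify_sysctl() {
  local conf="$1" root="${2:-/proc/sys}" rc=0 line key want have path
  while IFS= read -r line || [ -n "$line" ]; do
    case "$line" in
      '#'*|';'*) continue ;;   # comment lines
      *=*) ;;                  # key=value lines are checked below
      *) continue ;;           # blank or unrecognized lines
    esac
    key=$(printf '%s' "${line%%=*}" | tr -d '[:space:]')
    want=$(printf '%s' "${line#*=}" | sed 's/^[[:space:]]*//; s/[[:space:]]*$//')
    path="$root/$(printf '%s' "$key" | tr . /)"
    if [ ! -r "$path" ]; then
      echo "MISSING $key"
      rc=1
      continue
    fi
    # Collapse whitespace so multi-field values compare cleanly.
    have=$(tr -s '[:space:]' ' ' < "$path" | sed 's/ $//')
    if [ "$have" != "$want" ]; then
      echo "DIFF $key want=$want have=$have"
      rc=1
    fi
  done < "$conf"
  return "$rc"
}

# Usage inside a VM:
#   verify_sysctl /etc/sysctl.d/kubernetes.conf && echo "all keys applied"
```

A MISSING line for a net.bridge or net.netfilter key usually means the corresponding kernel module is not loaded yet.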

Configure IPVS

mkdir -p /etc/sysconfig/modules/

cat > /etc/sysconfig/modules/ipvs.modules <<EOF

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

EOF

chmod 755 /etc/sysconfig/modules/ipvs.modules

bash /etc/sysconfig/modules/ipvs.modules
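
As far as I know, nothing on a systemd-based system runs the scripts under /etc/sysconfig/modules at boot, so these modules may be gone after a reboot. systemd-modules-load.service does read /etc/modules-load.d/*.conf, one module name per line. A minimal sketch that writes such a file; `write_modules_load` and the file name k8s.conf are my own choices, and the directory argument is only for testing.

```shell
# Write a modules-load.d file so the modules are loaded again at boot.
# write_modules_load and the k8s.conf file name are hypothetical choices.
write_modules_load() {
  local dir="${1:-/etc/modules-load.d}"
  mkdir -p "$dir"
  # One kernel module name per line.
  printf '%s\n' br_netfilter nf_conntrack ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh \
    > "$dir/k8s.conf"
}

# Usage on each VM (as root):
#   write_modules_load
```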

Verify

lsmod | grep -e ip_vs -e nf_conntrack

Configure DNS

echo "nameserver 8.8.8.8" >> /etc/resolv.conf

Install Docker

## dnf config-manager is provided by dnf-plugins-core

sudo dnf install -y dnf-plugins-core

## Add the Docker CE repo from the Aliyun mirror

sudo dnf config-manager --add-repo=https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

## Install Docker

sudo dnf install -y docker-ce docker-ce-cli docker-compose-plugin

## Start the Docker engine

systemctl start docker

## Start Docker at boot

systemctl enable docker

Edit the Docker configuration

cat > /etc/docker/daemon.json <<EOF

{

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver":"json-file",

"log-opts": {

"max-size":"100m"

}

}

EOF

Proxy for Docker

Reference: https://note.qidong.name/2020/05/docker-proxy/

sudo mkdir -p /etc/systemd/system/docker.service.d

## Adjust the values below to your own proxy setup; 192.168.64.1 is my host's IP

cat > /etc/systemd/system/docker.service.d/proxy.conf <<EOF

[Service]

Environment="HTTP_PROXY=http://192.168.64.1:7890/"

Environment="HTTPS_PROXY=http://192.168.64.1:7890/"

Environment="NO_PROXY=localhost,127.0.0.1,.example.com"

EOF

Restart Docker

systemctl daemon-reload

systemctl restart docker

Install containerd

sudo dnf install -y containerd.io

Generate the containerd configuration

containerd config default > /etc/containerd/config.toml

Edit the containerd configuration

vim /etc/containerd/config.toml

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc]

runtime_type = "io.containerd.runc.v2" ## this should already be the default, but check it

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]

SystemdCgroup = true ## change this to true, because the cgroup driver is systemd
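
Doing this by hand on three machines gets tedious, so the same change can be scripted. A minimal sketch, assuming the generated default config contains a line of the form `SystemdCgroup = false`; `enable_systemd_cgroup` is a made-up helper name, and the file argument is only there so it can be tried on a copy.

```shell
# Flip "SystemdCgroup = false" to "SystemdCgroup = true", keeping indentation.
# enable_systemd_cgroup is a hypothetical helper for illustration.
enable_systemd_cgroup() {
  local file="${1:-/etc/containerd/config.toml}" tmp
  tmp=$(mktemp) || return 1
  # Write to a temp file, then copy back so ownership and mode are preserved.
  sed 's/^\([[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*\)false/\1true/' \
    "$file" > "$tmp" && cat "$tmp" > "$file"
  rm -f "$tmp"
  # Succeed only if the setting now reads true.
  grep -q '^[[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*true' "$file"
}

# Usage on each VM (as root):
#   enable_systemd_cgroup && echo "SystemdCgroup is true"
```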

Proxy for containerd

mkdir -p /etc/systemd/system/containerd.service.d/

cat > /etc/systemd/system/containerd.service.d/http-proxy.conf <<EOF

[Service]

Environment="HTTP_PROXY=http://192.168.64.1:7890"

Environment="HTTPS_PROXY=http://192.168.64.1:7890"

EOF
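
The Docker and containerd drop-ins differ only in where they are written, so one small generator can serve both. This is a sketch, not the way the files above were produced: `render_proxy_dropin` is a name I made up, and the default NO_PROXY list is an assumption you will likely want to extend.

```shell
# Print a systemd drop-in that sets proxy environment variables for a service.
# render_proxy_dropin is a hypothetical helper; arguments are the proxy host,
# the proxy port, and an optional NO_PROXY list.
render_proxy_dropin() {
  local host="$1" port="$2" no_proxy="${3:-localhost,127.0.0.1}"
  cat <<EOF
[Service]
Environment="HTTP_PROXY=http://${host}:${port}"
Environment="HTTPS_PROXY=http://${host}:${port}"
Environment="NO_PROXY=${no_proxy}"
EOF
}

# Usage (as root), with my host IP and proxy port:
#   render_proxy_dropin 192.168.64.1 7890 \
#     > /etc/systemd/system/containerd.service.d/http-proxy.conf
# After a daemon-reload and restart, the effective values can be inspected with:
#   systemctl show --property=Environment containerd
```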

Restart containerd

systemctl daemon-reload && systemctl restart containerd

Install kubelet, kubeadm, and kubectl

Add the repo

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-aarch64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

Install the packages

sudo yum install -y kubelet kubeadm kubectl

Enable kubelet at boot

systemctl enable kubelet.service

Initialize master01

Run the following steps on master01.

kubeadm config print init-defaults > kubeadm-config.yaml

Edit the configuration

Note: the trailing ## comments mark the lines I changed; delete them when you edit the file.

apiVersion: kubeadm.k8s.io/v1beta3

bootstrapTokens:

- groups:

  - system:bootstrappers:kubeadm:default-node-token

  token: abcdef.0123456789abcdef

  ttl: 24h0m0s

  usages:

  - signing

  - authentication

kind: InitConfiguration

localAPIEndpoint:

  advertiseAddress: 192.168.64.2 ## change this to the current machine's IP

  bindPort: 6443

nodeRegistration:

  criSocket: unix:///run/containerd/containerd.sock ## change this to the containerd.sock path

  imagePullPolicy: IfNotPresent

  name: k8s-master01 ## the generated default is "node"; use this machine's hostname

  taints: null

---

apiServer:

  timeoutForControlPlane: 4m0s

apiVersion: kubeadm.k8s.io/v1beta3

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controllerManager: {}

dns: {}

etcd:

  local:

    dataDir: /var/lib/etcd

imageRepository: registry.k8s.io

kind: ClusterConfiguration

kubernetesVersion: 1.28.3 ## version number

networking:

  dnsDomain: cluster.local

  podSubnet: "10.244.0.0/16" ## flannel's default pod subnet

  serviceSubnet: 10.96.0.0/12

scheduler: {}

--- # everything below is newly added and configures ipvs

apiVersion: kubeproxy.config.k8s.io/v1alpha1

kind: KubeProxyConfiguration

mode: ipvs

Initialize

kubeadm init --config=kubeadm-config.yaml --upload-certs | tee kubeadm-init.log

Copy the kubectl configuration

mkdir -p $HOME/.kube/

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

## check

kubectl get node

kubectl get pod -n kube-system

Deploy the network

Download the flannel manifest and deploy it

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

kubectl create -f kube-flannel.yml
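
flannel only works if the podSubnet given to kubeadm matches the Network value in the manifest's net-conf.json, so a quick consistency check (ideally run before applying the manifest) can save some debugging. A rough sketch using plain text matching rather than a YAML parser; the three function names are made up, and it assumes both values appear on a single line as they do in the files used here.

```shell
# Print the podSubnet value from a kubeadm config file.
pod_subnet() {
  sed -n 's|^[[:space:]]*podSubnet:[[:space:]]*"\{0,1\}\([0-9./]*\)"\{0,1\}.*$|\1|p' "$1" | head -n 1
}

# Print the "Network" value from the net-conf.json part of kube-flannel.yml.
flannel_network() {
  sed -n 's|^[[:space:]]*"Network":[[:space:]]*"\([0-9./]*\)".*$|\1|p' "$1" | head -n 1
}

# Succeed only if both values were found and are identical.
# All three helpers are hypothetical names for illustration.
check_pod_cidr() {
  local a b
  a=$(pod_subnet "$1")
  b=$(flannel_network "$2")
  [ -n "$a" ] && [ "$a" = "$b" ]
}

# Usage on master01:
#   check_pod_cidr kubeadm-config.yaml kube-flannel.yml && echo "pod CIDR matches"
```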

Check flannel's status

kubectl get pod -n kube-flannel

Join the worker nodes

Run the following command on node01 and node02. It is printed to the console after kubeadm init succeeds.

kubeadm join 192.168.64.2:6443 --token abcdef.0123456789abcdef --discovery-token-ca-cert-hash sha256:28829c87136e5bdf1e22f4fcab6636c4d8c9172efe7548b092c31eb99a9e2d47
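
If the console output has scrolled away, the join command can be recovered from the kubeadm-init.log written by tee earlier. A small sketch, assuming the log contains the command as kubeadm prints it, possibly wrapped over two lines with a trailing backslash; `extract_join_command` is a made-up helper name.

```shell
# Print the last "kubeadm join ..." command found in a kubeadm init log,
# with backslash-continued lines joined into one.
# extract_join_command is a hypothetical helper for illustration.
extract_join_command() {
  sed -e ':a' -e '/\\$/N; s/[[:space:]]*\\\n[[:space:]]*/ /; ta' "$1" \
    | grep '^kubeadm join' \
    | tail -n 1
}

# Usage on master01:
#   extract_join_command kubeadm-init.log
# The token in the config above has a 24h TTL; once it has expired,
# a fresh command can be printed on master01 with:
#   kubeadm token create --print-join-command
```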

Deployment complete

That completes the k8s cluster on the M1 Pro. Here is the final state.

Pod status

[root@k8s-master01 ~]# kubectl get pod -A -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-flannel kube-flannel-ds-hccq4 1/1 Running 0 3h47m 192.168.64.3 k8s-node01 <none> <none>

kube-flannel kube-flannel-ds-lw2cf 1/1 Running 0 3h46m 192.168.64.4 k8s-node02 <none> <none>

kube-flannel kube-flannel-ds-mnxs5 1/1 Running 0 3h54m 192.168.64.2 k8s-master01 <none> <none>

kube-system coredns-5dd5756b68-7z4gg 1/1 Running 0 3h54m 10.244.0.2 k8s-master01 <none> <none>

kube-system coredns-5dd5756b68-ssb4z 1/1 Running 0 3h54m 10.244.0.3 k8s-master01 <none> <none>

kube-system etcd-k8s-master01 1/1 Running 19 3h55m 192.168.64.2 k8s-master01 <none> <none>

kube-system kube-apiserver-k8s-master01 1/1 Running 19 3h55m 192.168.64.2 k8s-master01 <none> <none>

kube-system kube-controller-manager-k8s-master01 1/1 Running 27 3h55m 192.168.64.2 k8s-master01 <none> <none>

kube-system kube-proxy-9jjh5 1/1 Running 0 3h47m 192.168.64.3 k8s-node01 <none> <none>

kube-system kube-proxy-dt6v5 1/1 Running 0 3h46m 192.168.64.4 k8s-node02 <none> <none>

kube-system kube-proxy-qk7jb 1/1 Running 0 3h54m 192.168.64.2 k8s-master01 <none> <none>

kube-system kube-scheduler-k8s-master01 1/1 Running 25 3h55m 192.168.64.2 k8s-master01 <none> <none>

Node status

[root@k8s-master01 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master01 Ready control-plane 3h56m v1.28.3

k8s-node01 Ready <none> 3h48m v1.28.2

k8s-node02 Ready <none> 3h47m v1.28.2
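
For a scripted check instead of eyeballing the table, the same output can be filtered for nodes that are not Ready. A minimal sketch that reads the default `kubectl get node` table from stdin; `not_ready_nodes` is a made-up helper name.

```shell
# Read `kubectl get node` output on stdin and print the names of nodes whose
# STATUS column is not exactly "Ready" (so "Ready,SchedulingDisabled" is
# reported too). not_ready_nodes is a hypothetical helper for illustration.
not_ready_nodes() {
  # NR > 1 skips the header row; $1 is NAME and $2 is STATUS.
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Usage on master01:
#   kubectl get node | not_ready_nodes
# No output means every node reports Ready.
```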